
Introduction

Dimensionality reduction through feature extraction aims to transform the original features into a new set of features while retaining most of the underlying information. As a result, we can reduce the number of features in our data while keeping most of the predictive power.

In this tutorial, we'll go through various feature extraction methods. Note that the new features these methods create are not human-interpretable: although they retain all or nearly all of the ability to train our models, to a human they look like a collection of random numbers. If we want to keep our models interpretable, dimensionality reduction by feature selection is a better option.

Table of contents:

- Introduction

- Reducing Features Using Principal Components

- Reducing Features When Data Is Linearly Inseparable

- Reducing Features by Maximizing Class Separability

- Reducing Features Using Matrix Factorization

- Reducing Features of Sparse Data

- Conclusion

Let's import the necessary libraries:

from sklearn import datasets

from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

from sklearn.decomposition import PCA, KernelPCA

from sklearn.datasets import make_circles

from sklearn.preprocessing import StandardScaler

from sklearn.decomposition import NMF

from sklearn.decomposition import TruncatedSVD

from scipy.sparse import csr_matrix

import numpy as np

Reducing Features Using Principal Components

Principal component analysis (PCA) consists of computing the principal components and using them to perform a change of basis on the data. Put simply, it projects a high-dimensional object onto a lower-dimensional space.

This may sound abstract, but we see 2D projections of 3D objects every time we watch TV. The goal is to preserve as much information as possible in the projection. A sound way to do this is to find the object's longest axis, then rotate the object around that axis to find its second-longest axis, and so on.
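To make the "longest axis" intuition concrete, here is a minimal sketch using NumPy's SVD (an illustration, not how scikit-learn's examples below are written). After centering, the right singular vectors of the data are the principal axes, ordered from the direction of greatest variance down, and projecting onto the first two should match scikit-learn's PCA up to the sign of each component. The variable names are our own; the standardized digits data is the same dataset used in the next example.

```python
import numpy as np
from sklearn import datasets
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

X = StandardScaler().fit_transform(datasets.load_digits().data)

# Center the data (PCA always works on centered data)
X_centered = X - X.mean(axis=0)

# Rows of Vt are the principal axes; S is sorted in descending order,
# so the first row is the "longest" axis, the second row the next longest, ...
U, S, Vt = np.linalg.svd(X_centered, full_matrices=False)

# Change of basis onto the two longest axes
manual_scores = X_centered @ Vt[:2].T

# Share of the total variance lying along each axis
manual_ratio = S**2 / np.sum(S**2)

pca = PCA(n_components=2, svd_solver="full").fit(X)
print(manual_ratio[:2])
print(pca.explained_variance_ratio_)
```

The two printed ratio arrays should agree; the projected values agree up to a per-component sign, since an axis and its opposite describe the same direction.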

This tutorial provides a concise, step-by-step explanation of how PCA works. Given a collection of features, suppose you want to reduce the number of features while retaining the data's variance:

# Load the data

digits = datasets.load_digits()

# Feature matrix standardization

features = StandardScaler().fit_transform(digits.data)

# Create a PCA that retains 95% of the variance and whitens the output

pca = PCA(n_components=0.95, whiten=True)

# Fit PCA and transform the features

pcafeatures = pca.fit_transform(features)

# Display results

print("Original number of features:", features.shape[1])

print("Reduced number of features:", pcafeatures.shape[1])

Original number of features: 64

Reduced number of features: 40

scikit-learn implements PCA in the PCA class. Its n_components parameter behaves in one of two ways depending on its value. If it is an integer, PCA returns exactly that many features. This raises the question of how to choose the best number of features.

Fortunately, if n_components is a float between 0 and 1, PCA returns the smallest number of features that retains more than that fraction of the original variance. Common values are 0.95 and 0.99, meaning that 95% and 99% of the variance of the original features is retained. Setting whiten=True transforms the values of each principal component so that they have zero mean and unit variance. Another useful argument is svd_solver="randomized", which uses a stochastic algorithm to find the first principal components in often much less time; note that the randomized solver does not accept a fractional n_components such as 0.95, so pass an integer when using it.
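The sketch below, assuming the same standardized digits data, checks both claims. Fitting PCA with every component and taking the cumulative sum of explained_variance_ratio_ gives the smallest number of components whose total exceeds 0.95, which should equal what n_components=0.95 selects; and the whitened output should have (numerically) zero mean and unit variance. svd_solver="full" is set explicitly only so that both fits use the same solver, and names such as n_keep are our own.

```python
import numpy as np
from sklearn import datasets
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

features = StandardScaler().fit_transform(datasets.load_digits().data)

# Fit PCA with all components to see the full variance spectrum
pca_all = PCA(svd_solver="full").fit(features)
cumulative = np.cumsum(pca_all.explained_variance_ratio_)

# Smallest number of components whose cumulative ratio exceeds 0.95
n_keep = int(np.searchsorted(cumulative, 0.95, side="right") + 1)

# A fractional n_components should select the same number of components
pca_95 = PCA(n_components=0.95, svd_solver="full", whiten=True)
whitened = pca_95.fit_transform(features)
print(n_keep, pca_95.n_components_)

# Whitened components: zero mean and unit variance
# (scikit-learn scales with the sample variance, i.e. ddof=1)
print(whitened.mean(axis=0)[:3])
print(whitened.std(axis=0, ddof=1)[:3])
```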

Reducing Features When Data Is Linearly Inseparable

Suppose your data is linearly inseparable and you want to reduce its dimensionality. In this case, you can use kernel PCA, a kernel-based extension of principal component analysis. Like standard PCA, it simplifies the feature matrix by reducing its dimensionality (i.e., the number of features).

Standard PCA uses a linear projection to reduce the number of features. This works well when the data is linearly separable, that is, when you can draw a straight line or hyperplane between the classes. However, linear transformations may not work well if your data is not linearly separable (e.g., you can only separate the classes using a curved decision boundary).

In the solution below, we use scikit-learn's make_circles() function to generate a simulated dataset with a target vector of two classes and two features. make_circles() creates linearly inseparable data: one class is entirely surrounded by the other.

If we used linear PCA to reduce the dimensionality of this data, the two classes would be linearly projected onto the first principal component, where they would overlap. Ideally, we want a transformation that both reduces the dimensionality and makes the data linearly separable. Kernel PCA can do both: kernels allow us to project linearly inseparable data into a higher dimension where it is linearly separable (this is known as the kernel trick). Don't worry if you don't understand the details of the kernel trick; just think of kernels as different ways of projecting the data.
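As a quick check of that claim, here is a sketch that reduces make_circles data (same settings as the kernel PCA example in this section) to a single feature with linear PCA and with RBF kernel PCA, then fits a logistic regression on each one-dimensional result. A linear classifier on one feature can only choose a single threshold, so its training accuracy is a rough proxy for how separable each projection is. Using LogisticRegression as the probe is our own choice, not part of the original method, and exact accuracies may vary slightly across scikit-learn versions.

```python
from sklearn.datasets import make_circles
from sklearn.decomposition import PCA, KernelPCA
from sklearn.linear_model import LogisticRegression

features, target = make_circles(n_samples=2000, random_state=1, noise=0.1, factor=0.1)

# Reduce the two features to one, linearly and with an RBF kernel
linear_1d = PCA(n_components=1).fit_transform(features)
kernel_1d = KernelPCA(kernel="rbf", gamma=16, n_components=1).fit_transform(features)

# One feature + linear classifier = one threshold, so accuracy reflects separability
acc_linear = LogisticRegression().fit(linear_1d, target).score(linear_1d, target)
acc_kernel = LogisticRegression().fit(kernel_1d, target).score(kernel_1d, target)
print(f"linear PCA: {acc_linear:.2f}, kernel PCA: {acc_kernel:.2f}")
```

On the linear projection the inner class lands in the middle of the outer class's range, so no single threshold separates them; the RBF projection pulls the inner cluster apart from the ring.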

# Creation of the linearly inseparable data

features, _ = make_circles(n_samples=2000, random_state=1, noise=0.1, factor=0.1)

# Apply kernel PCA with a radial basis function (RBF) kernel

k_pca = KernelPCA(kernel="rbf", gamma=16, n_components=1)

k_pcaf = k_pca.fit_transform(features)

print("Original number of features:", features.shape[1])

print("Reduced number of features:", k_pcaf.shape[1])

Original number of features: 2

Reduced number of features: 1

scikit-learn's KernelPCA supports a variety of kernels through the kernel argument. A popular choice is the Gaussian radial basis function kernel (rbf); other options include the polynomial kernel (poly) and the sigmoid kernel (sigmoid). Specifying a linear projection (linear) reproduces the results of standard PCA.
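To illustrate the last point, here is a small sketch comparing PCA with KernelPCA using kernel="linear". It uses the Iris data rather than make_circles because the circles are nearly round, which makes the direction of the first component poorly determined. Each component is only defined up to its sign, so the signs are aligned column by column before comparing.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA, KernelPCA

features = load_iris().data

pca_scores = PCA(n_components=2).fit_transform(features)
kpca_scores = KernelPCA(kernel="linear", n_components=2).fit_transform(features)

# Flip each kernel PCA column if it points the opposite way to the PCA column
signs = np.sign((pca_scores * kpca_scores).sum(axis=0))
print(np.allclose(pca_scores, kpca_scores * signs, atol=1e-6))
```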

One downside of kernel PCA is that it requires us to specify several parameters. For example, with standard PCA we can set n_components to 0.95 to have PCA select the number of components that retains 95% (or more) of the variance. Kernel PCA offers no such option; instead, we must specify the number of components ourselves (here, n_components=1).

Furthermore, kernels come with their own hyperparameters; for example, the radial basis function kernel requires a gamma value. So how do we know which values to use? Through trial and error: we train our machine learning model multiple times, each time with a different kernel or parameter value. Once we find the combination of values that produces the best predictions, we are done.
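One way to automate that trial and error is a grid search. The sketch below assumes scikit-learn's Pipeline and GridSearchCV, with logistic regression standing in for "our machine learning model"; the kernel and gamma values in the grid are illustrative, not recommendations. Each combination is scored with 5-fold cross-validation, and because KernelPCA sits inside the pipeline, it is refit on the training folds only.

```python
from sklearn.datasets import make_circles
from sklearn.decomposition import KernelPCA
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline

features, target = make_circles(n_samples=600, random_state=1, noise=0.1, factor=0.1)

# Kernel PCA down to one feature, followed by a simple classifier
pipe = Pipeline([
    ("kpca", KernelPCA(n_components=1)),
    ("clf", LogisticRegression()),
])

# Candidate kernels and gamma values (illustrative)
param_grid = {
    "kpca__kernel": ["rbf", "poly"],
    "kpca__gamma": [0.1, 1, 16],
}

search = GridSearchCV(pipe, param_grid, cv=5)
search.fit(features, target)
print(search.best_params_, round(search.best_score_, 3))
```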

Reducing Features by Maximizing Class Separability

Linear discriminant analysis (LDA) is a classification method that is also a popular technique for dimensionality reduction. LDA works similarly to PCA in that it projects our feature space onto a lower-dimensional space. However, whereas PCA is only concerned with the directions that maximize the variance in the data, LDA also seeks to maximize the differences between classes, which is why it needs the target vector.
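One way to make "maximizing the differences between classes" concrete is the Fisher ratio of a one-dimensional projection: the between-class sum of squares divided by the within-class sum of squares. LDA's first discriminant direction maximizes this ratio, while PCA's first component ignores the labels entirely. The sketch below compares the two on the Iris data; fisher_ratio is a hypothetical helper written for this illustration, not a scikit-learn function.

```python
import numpy as np
from sklearn import datasets
from sklearn.decomposition import PCA
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis


def fisher_ratio(z, y):
    """Between-class over within-class sum of squares of a 1-D projection z (hypothetical helper)."""
    overall_mean = z.mean()
    between = 0.0
    within = 0.0
    for label in np.unique(y):
        z_k = z[y == label]
        between += len(z_k) * (z_k.mean() - overall_mean) ** 2
        within += np.sum((z_k - z_k.mean()) ** 2)
    return between / within


iris = datasets.load_iris()
features, target = iris.data, iris.target

# PCA ignores the labels; LDA uses them
pca_1d = PCA(n_components=1).fit_transform(features).ravel()
lda_1d = LinearDiscriminantAnalysis(n_components=1).fit_transform(features, target).ravel()

pca_ratio = fisher_ratio(pca_1d, target)
lda_ratio = fisher_ratio(lda_1d, target)
print(f"Fisher ratio, PCA: {pca_ratio:.1f}, LDA: {lda_ratio:.1f}")
```

Both projections are one feature wide, but the LDA projection should score higher because it was chosen specifically to spread the class means apart relative to the spread within each class.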

# Load the Iris flower dataset

iris = datasets.load_iris()

features = iris.data

target = iris.target

# Create an LDA that keeps one component, then fit it and use it to transform the features

lda = LinearDiscriminantAnalysis(n_components=1)

features_lda = lda.fit(features, target).transform(features)

# Print the number of features

print("Original number of features:", features.shape[1])

print("Reduced number of features:", features_lda.shape[1])

Original number of features: 4

Reduced number of features: 1

We can use explained_variance_ratio_ to view the amount of variance explained by each component. In our solution, the single component explained over 99% of the variance:

lda.explained_variance_ratio_

array([0.9912126])

In scikit-learn, LDA is implemented by LinearDiscriminantAnalysis, and the n_components argument specifies how many features we want returned. Note that LDA can return at most min(n_classes - 1, n_features) features; for the three Iris species, that is two. To figure out what value to use for n_components (i.e., how many features to keep), we can take advantage of the fact that explained_variance_ratio_ gives the variance explained by each output feature and is a sorted array.
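A short sketch of that limit on the Iris data (three classes, four features): with n_components=None, the fitted model keeps min(n_classes - 1, n_features) = 2 discriminants, and explained_variance_ratio_ has one sorted entry per discriminant. Asking for three components raises a ValueError in recent scikit-learn releases; older versions only warned, so treat that part as version-dependent.

```python
import numpy as np
from sklearn import datasets
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

iris = datasets.load_iris()
X, y = iris.data, iris.target

# n_components=None keeps the maximum number of discriminants
lda_all = LinearDiscriminantAnalysis(n_components=None).fit(X, y)
ratios = np.asarray(lda_all.explained_variance_ratio_)
print(ratios)                    # one ratio per discriminant, largest first
print(lda_all.transform(X).shape)

# More components than n_classes - 1 are rejected
try:
    LinearDiscriminantAnalysis(n_components=3).fit(X, y)
    rejected = False
except ValueError:
    rejected = True
print("n_components=3 rejected:", rejected)
```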

In practice, we can run LinearDiscriminantAnalysis with n_components set to None to get the ratio of variance explained by every component feature, then calculate how many components are required to exceed a threshold of variance explained (often 0.95 or 0.99):

# Load Iris flower dataset:

iris123 = datasets.load_iris()

features = iris123.data

target = iris123.target

# Create an LDA that keeps all components and fit it

lda_r = LinearDiscriminantAnalysis(n_components=None)

lda_r.fit(features, target)

# array of explained variance ratios

lda_var_r = lda_r.explained_variance_ratio_

# function ceration

def select_n_c(v_ratio, g_var: float) -> int:

# initial variance explained setting

total_v = 0.0

# number of features initialisation

n_components = 0

# If we consider explained variance of each feature:

for explained_v in v_ratio:

# explained variance addition to the total

total_v += explained_v

# add one to number of components

n_components += 1

# we attain our goal level of explained variance

if total_v >= g_var:

# end the loop

break

# return the number of components

return n_components

# run the function

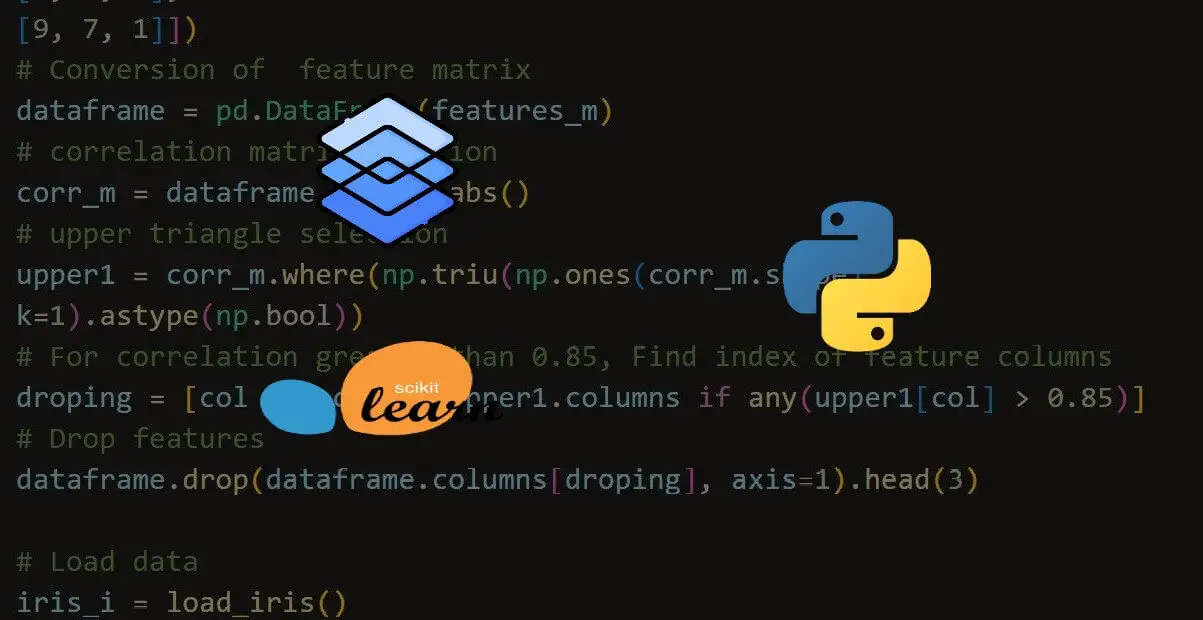

select_n_c(lda_var_r, 0.95)1Reducing Features Using Matrix Factorization

We can use non-negative matrix factorization (NMF) to reduce the dimensionality of a non-negative feature matrix. NMF is an unsupervised technique for linear dimensionality reduction that factorizes the feature matrix (i.e., breaks it up into multiple matrices whose product approximates the original matrix) into matrices representing the latent relationship between observations and their features.
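A minimal sketch of that factorization in scikit-learn, assuming the digits data (pixel intensities from 0 to 16, so non-negative). scikit-learn stores observations as rows, so it factorizes X of shape (n_samples, n_features) as W @ H: fit_transform returns W with shape (n_samples, r), and components_ holds H with shape (r, n_features). max_iter=500 is our own choice to give the solver more room to converge.

```python
import numpy as np
from sklearn import datasets
from sklearn.decomposition import NMF

X = datasets.load_digits().data  # non-negative pixel intensities

nmf = NMF(n_components=12, random_state=1, max_iter=500)
W = nmf.fit_transform(X)  # reduced features, shape (n_samples, 12)
H = nmf.components_       # shape (12, n_features)
print(W.shape, H.shape)

# Both factors are non-negative, and their product approximates X
approx = W @ H
rel_err = np.linalg.norm(X - approx) / np.linalg.norm(X)
print(f"relative reconstruction error: {rel_err:.3f}")

# Negative input is rejected
try:
    NMF(n_components=2).fit(X - X.mean())
    negative_rejected = False
except ValueError:
    negative_rejected = True
print("negative input rejected:", negative_rejected)
```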

Intuitively, NMF can reduce dimensionality because, in matrix multiplication, the two factors (the matrices being multiplied) can have significantly fewer dimensions than the product matrix. Formally, given a desired number of returned features, r, NMF factorizes our feature matrix such that:

$$V \approx WH$$

where V is our (d, n) feature matrix (d features, n observations), W is a (d, r) matrix, and H is an (r, n) matrix.

- By adjusting the value of r, we can set the amount of dimensionality reduction we want.

- A critical requirement of NMF is that the feature matrix cannot contain negative values. Furthermore, unlike PCA and the other techniques we have looked at, NMF does not give us the explained variance of the output features. Consequently, the most straightforward way to find the optimal value of n_components is to try a range of values and keep the one that produces the best results in our final model.

You can follow the code below:

# data loading

digit = datasets.load_digits()

# feature matrix loading

feature_m = digit.data

# Create NMF, fit it, and apply it to the feature matrix

n_mf = NMF(n_components=12, random_state=1)

features_nmf = n_mf.fit_transform(feature_m)

# Show results

print("Original number of features:", feature_m.shape[1])

print("Reduced number of features:", features_nmf.shape[1])

Original number of features: 64

Reduced number of features: 12

Reducing Features of Sparse Data

Truncated singular value decomposition (TSVD) is a good choice for reducing the dimensionality of sparse data. TSVD is similar to PCA; in fact, PCA often uses non-truncated singular value decomposition (SVD) in one of its steps. Given d features, regular SVD produces a d × d factor matrix, whereas TSVD keeps only the first k singular vectors, where k is specified beforehand by a parameter (n_components in scikit-learn).
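To illustrate the relationship, the sketch below centers the digits data by hand and runs TruncatedSVD on it; the result should match PCA up to the sign of each component, because PCA amounts to an SVD of centered data. algorithm="arpack" is our choice here for a precise comparison; the default randomized algorithm is an approximation.

```python
import numpy as np
from sklearn import datasets
from sklearn.decomposition import PCA, TruncatedSVD

X = datasets.load_digits().data

# TruncatedSVD does not center the data, so we do it ourselves for this comparison
X_centered = X - X.mean(axis=0)

pca_scores = PCA(n_components=2, svd_solver="full").fit_transform(X)
tsvd_scores = TruncatedSVD(n_components=2, algorithm="arpack").fit_transform(X_centered)

# Align the sign of each component before comparing
signs = np.sign((pca_scores * tsvd_scores).sum(axis=0))
print(np.allclose(pca_scores, tsvd_scores * signs, atol=1e-6))
```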

The practical advantage of TSVD is that it works directly on sparse feature matrices: unlike standard PCA, it does not center the data, so it never has to turn a sparse matrix dense. One issue with TSVD is that, because of how it uses a random number generator, the signs of the output can flip between fittings. An easy workaround is to call fit() only once per preprocessing pipeline and then call transform() as many times as needed.
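A sketch of that workflow, assuming raw digits pixels stored as a CSR matrix and a train/test split made with train_test_split (our own setup for illustration). The TSVD is fit once on the training data and the same fitted object is reused for every later transform; fixing random_state additionally makes a refit reproduce the same components, signs included.

```python
import numpy as np
from scipy.sparse import csr_matrix
from sklearn import datasets
from sklearn.decomposition import TruncatedSVD
from sklearn.model_selection import train_test_split

digits = datasets.load_digits()
# Raw pixel intensities contain many zeros, so a sparse format is reasonable here
X_sparse = csr_matrix(digits.data)
X_train, X_test = train_test_split(X_sparse, random_state=1)

# Fit once, on the training data only
tsvd = TruncatedSVD(n_components=12, random_state=1)
train_reduced = tsvd.fit_transform(X_train)

# Reuse the fitted object for every later transform: outputs are identical
test_first = tsvd.transform(X_test)
test_again = tsvd.transform(X_test)
print(np.array_equal(test_first, test_again))

# With a fixed random_state, a refit reproduces the same components and signs
refit = TruncatedSVD(n_components=12, random_state=1).fit(X_train)
print(np.allclose(refit.components_, tsvd.components_))
```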

# data loading

digit123 = datasets.load_digits()

# Standardize the feature matrix
# (centering turns most zeros into nonzero values, so the matrix below is sparse in format only)

features_m = StandardScaler().fit_transform(digit123.data)

# Convert it to a sparse matrix

f_sparse = csr_matrix(features_m)

# Create a TSVD

tsvd = TruncatedSVD(n_components=12)

# Fit the TSVD on the sparse matrix and transform it

features_sp_tsvd = tsvd.fit(f_sparse).transform(f_sparse)

# results

print("Original number of features:", f_sparse.shape[1])

print("Reduced number of features:", features_sp_tsvd.shape[1])

Original number of features: 64

Reduced number of features: 12

As with linear discriminant analysis, we have to specify the number of features (components) to output. This is done with the n_components argument.

A natural next question is: what is the optimal number of components? One strategy is to include n_components as a hyperparameter to optimize during model selection (i.e., choose the value of n_components that produces the best-trained model).
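A sketch of that model-selection approach, assuming a Pipeline of TruncatedSVD followed by logistic regression on the raw (unstandardized, hence genuinely sparse) digits pixels. The candidate values and the classifier are illustrative choices, not part of the original solution.

```python
from scipy.sparse import csr_matrix
from sklearn import datasets
from sklearn.decomposition import TruncatedSVD
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline

digits = datasets.load_digits()
X_sparse = csr_matrix(digits.data)

pipe = Pipeline([
    ("tsvd", TruncatedSVD(random_state=1)),
    ("clf", LogisticRegression(max_iter=2000)),
])

# Treat n_components as an ordinary hyperparameter scored by cross-validation
search = GridSearchCV(pipe, {"tsvd__n_components": [4, 8, 12, 24]}, cv=3)
search.fit(X_sparse, digits.target)
print(search.best_params_, round(search.best_score_, 3))
```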

Alternatively, because TSVD provides the ratio of the original feature matrix's variance explained by each component, we can select the number of components that explain a desired amount of variance (95% and 99% are common values). For example, in our solution, the first three output components explain roughly 30% of the original data's variance:

# Sum of first three components' explained variance ratios

tsvd.explained_variance_ratio_[0:3].sum()

Conclusion

In this tutorial, we have seen how to use several feature extraction techniques to perform dimensionality reduction.

PCA is the most common of these techniques. However, there are many cases where you may want to use another method:

- When your data is linearly inseparable, use KernelPCA.

- If you want to maximize class separability, use LDA.

- If your feature matrix contains no negative values, non-negative matrix factorization (NMF) is another option.

- When your data is sparse, try truncated singular value decomposition (TSVD).

You can get the complete code here or in the Colab notebook.

Read also: K-Fold Cross Validation using Scikit-Learn in Python.

Happy learning ♥
