
Recurrent Neural Networks (RNNs) are very powerful sequence models for classification problems. However, in this tutorial, we are going to do something different: we will use RNNs as generative models, which means they can learn the sequences of a problem and then generate an entirely new sequence for the problem domain.

In this tutorial, you will learn how to build an LSTM model that can generate text (character by character) using TensorFlow and Keras in Python.

Note that the goal of this tutorial is to show how to use LSTM models for text generation with TensorFlow and Keras. If you want a better text generator, check this tutorial that uses transformer models to generate text.

In text generation, we show the model many training examples so it can learn a pattern between the input and output. Each input is a sequence of characters, and the output is the next single character. For instance, say we want to train on the sentence "python is a great language": the input of the first sample is "python is a great langua" and the output would be "g". The second sample input would be "ython is a great languag" and the output is "e", and so on, until we have looped over the whole dataset. We need to show the model as many examples as we can in order for it to make reasonable predictions.
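To make the sliding-window idea concrete, here is a small pure-Python sketch that is not part of the tutorial's pipeline. It builds the (input, output) pairs for the example sentence; the helper name `make_training_pairs` and the window length of 24 are illustrative choices, picked so the first input matches "python is a great langua".

```python
def make_training_pairs(text, window):
    """Hypothetical helper: slide a fixed-size window over `text`.

    Each input is `window` consecutive characters, and the target is the
    character that immediately follows them.
    """
    pairs = []
    for start in range(len(text) - window):
        input_ = text[start:start + window]
        target = text[start + window]
        pairs.append((input_, target))
    return pairs


pairs = make_training_pairs("python is a great language", window=24)
for input_, target in pairs:
    print(repr(input_), "->", repr(target))
```

Because every window needs one extra character to serve as its target, a text of n characters yields n - window pairs, which is only 2 for this short sentence.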


Getting Started

Let's install the required dependencies for this tutorial:

```bash
pip3 install tensorflow==2.0.1 numpy requests tqdm
```

Importing everything:

```python
import tensorflow as tf
import numpy as np
import os
import pickle
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, LSTM, Dropout
from string import punctuation
```

Preparing the Dataset

We are going to use a free downloadable book as the dataset for this tutorial: Alice’s Adventures in Wonderland by Lewis Carroll. But you can use any book/corpus you want.

These lines of code will download it and save it in a text file:

```python
import requests

content = requests.get("http://www.gutenberg.org/cache/epub/11/pg11.txt").text
with open("data/wonderland.txt", "w", encoding="utf-8") as f:
    f.write(content)
```

Just make sure a folder called "data" exists in your current directory.

Now let's define our parameters and try to clean this dataset:

```python
sequence_length = 100
BATCH_SIZE = 128
EPOCHS = 30
# dataset file path
FILE_PATH = "data/wonderland.txt"
BASENAME = os.path.basename(FILE_PATH)
# read the data
text = open(FILE_PATH, encoding="utf-8").read()
# remove caps, comment this code if you want uppercase characters as well
text = text.lower()
# remove punctuation
text = text.translate(str.maketrans("", "", punctuation))
```

The above code reduces our vocabulary, for better and faster training, by removing uppercase characters and punctuation. If you wish to keep commas, periods and colons, just define your own punctuation string variable.
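For example, one way to keep commas, periods and colons is to build the removal string from `string.punctuation` minus those characters. This is a minimal sketch; the names `keep` and `custom_punctuation` and the sample sentence are illustrative, not from the tutorial.

```python
from string import punctuation

# characters we want to keep in the text (illustrative choice)
keep = ",.:"
# every ASCII punctuation character except the ones listed in `keep`
custom_punctuation = "".join(c for c in punctuation if c not in keep)

sample = "Alice said: 'Hello, world!' (quietly)."
# same cleaning steps as above, but with the custom removal string
cleaned = sample.lower().translate(str.maketrans("", "", custom_punctuation))
print(cleaned)
```

You would then pass `custom_punctuation` instead of `punctuation` to `str.maketrans()` in the cleaning code. Note that `string.punctuation` only covers ASCII punctuation, so curly quotes and other non-ASCII symbols are not removed either way.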

Let's print some statistics about the dataset:

```python
# print some stats
n_chars = len(text)
vocab = ''.join(sorted(set(text)))
print("unique_chars:", vocab)
n_unique_chars = len(vocab)
print("Number of characters:", n_chars)
print("Number of unique characters:", n_unique_chars)
```

Output:

```
unique_chars:
0123456789abcdefghijklmnopqrstuvwxyz
Number of characters: 154207
Number of unique characters: 39
```

Now that we have loaded and cleaned the dataset, we need a way to convert these characters into integers. There are plenty of Keras and Scikit-Learn utilities for that, but we are going to do it manually in Python.

Since we have vocab as our vocabulary that contains all the unique characters of our dataset, we can make two dictionaries that map each character to an integer number and vice-versa:

```python
# dictionary that converts characters to integers
char2int = {c: i for i, c in enumerate(vocab)}
# dictionary that converts integers to characters
int2char = {i: c for i, c in enumerate(vocab)}
```

Let's save them to a file (to retrieve them later in text generation):

```python
# save these dictionaries for later generation
with open(f"{BASENAME}-char2int.pickle", "wb") as f:
    pickle.dump(char2int, f)
with open(f"{BASENAME}-int2char.pickle", "wb") as f:
    pickle.dump(int2char, f)
```

Now let's encode our dataset; in other words, we're going to convert each character into its corresponding integer:

```python
# convert all text into integers
encoded_text = np.array([char2int[c] for c in text])
```

Since we want our code to scale to larger datasets, we'll use the tf.data API for efficient dataset handling. Let's create a tf.data.Dataset object from this encoded_text array:

```python
# construct tf.data.Dataset object
char_dataset = tf.data.Dataset.from_tensor_slices(encoded_text)
```

Awesome, now this char_dataset object holds all the characters of the dataset; let's print the first few:

```python
# print first 8 characters
for char in char_dataset.take(8):
    print(char.numpy(), int2char[char.numpy()])
```

This takes the very first 8 characters and prints their integer representations along with the characters themselves:

```
38
27 p
29 r
26 o
21 j
16 e
14 c
```

Great, now we need to construct our sequences. As mentioned earlier, we want each input sample to be a sequence of sequence_length characters, and the output to be the single character that comes next. Luckily for us, we can use tf.data.Dataset's batch() method to gather characters together:

```python
# build sequences by batching
sequences = char_dataset.batch(2*sequence_length + 1, drop_remainder=True)
# print sequences
for sequence in sequences.take(2):
    print(''.join([int2char[i] for i in sequence.numpy()]))
```

As you may notice, I used 2*sequence_length + 1 as the size of each sample; you'll see why very soon. Check the output:

```
project gutenbergs alices adventures in wonderland by lewis carroll

this ebook is for the use of anyone anywhere at no cost and with
almost no restrictions whatsoever you may copy it give it away or
reuse it under the terms of the project gutenberg license included
with this ebook or online at wwwgutenbergorg
...
<SNIPPED>
```

Notice that I've converted the integer sequences back into normal text using the int2char dictionary built earlier.
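If it helps, the effect of `batch(2*sequence_length + 1, drop_remainder=True)` on the encoded text can be mimicked with plain NumPy: split the array into non-overlapping chunks of equal size and drop the leftover characters at the end. This is only an illustration on a made-up toy string with a sequence length of 3; the tutorial itself relies on tf.data.

```python
import numpy as np

# toy corpus and vocabulary, built the same way as in the tutorial
text = "alice was beginning to get very tired"
vocab = "".join(sorted(set(text)))
char2int = {c: i for i, c in enumerate(vocab)}
int2char = {i: c for i, c in enumerate(vocab)}
encoded_text = np.array([char2int[c] for c in text])

sequence_length = 3
chunk_size = 2 * sequence_length + 1
# number of complete chunks; the trailing remainder is dropped
n_chunks = len(encoded_text) // chunk_size
sequences = encoded_text[: n_chunks * chunk_size].reshape(n_chunks, chunk_size)

for sequence in sequences:
    # decode each chunk back to text with int2char
    print(repr("".join(int2char[int(i)] for i in sequence)))
```

The 37-character toy text gives five chunks of 7 characters; the last two characters ("ed") don't fill a complete chunk, so they are dropped, which is what `drop_remainder=True` does.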

Now that you know how each sample is represented, let's prepare our inputs and targets. We need a way to convert a single sample (sequence of characters) into multiple (input, target) samples. Fortunately, the flat_map() method is exactly what we need: it takes a callback function that turns each sample into a new dataset, and then flattens all of those datasets into one:

```python
def split_sample(sample):
    # example :
    # sequence_length is 10
    # sample is "python is a great pro" (21 length)
    # ds will initially contain ('python is ', 'a') encoded as integers
    ds = tf.data.Dataset.from_tensors((sample[:sequence_length], sample[sequence_length]))
    for i in range(1, (len(sample)-1) // 2):
        # first (input_, target) will be ('ython is a', ' ')
        # second (input_, target) will be ('thon is a ', 'g')
        # third (input_, target) will be ('hon is a g', 'r')
        # and so on
        input_ = sample[i: i+sequence_length]
        target = sample[i+sequence_length]
        # extend the dataset with these samples by concatenate() method
        other_ds = tf.data.Dataset.from_tensors((input_, target))
        ds = ds.concatenate(other_ds)
    return ds

# prepare inputs and targets
dataset = sequences.flat_map(split_sample)
```

To get a good understanding of how the above code works, let's take an example. Say we have a sequence length of 10 (too small, but good for explanation); the sample argument is then a sequence of 21 characters (remember the 2*sequence_length+1) encoded as integers. For convenience, let's imagine it isn't encoded and that it's "python is a great pro".

The first data sample we're going to generate is the (input, target) tuple ('python is ', 'a'), the second is ('ython is a', ' '), the third is ('thon is a ', 'g'), and so on. We do that for all samples, and in the end we dramatically increase the number of training samples. We've used the ds.concatenate() method to add these samples together.
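The same splitting logic can be traced in plain Python on the "python is a great pro" example. This sketch, with the hypothetical name `split_sample_py`, mirrors the loop in `split_sample()` but works on a `str` instead of tensors, so you can check which pairs come out of one 2*sequence_length + 1 chunk.

```python
def split_sample_py(sample, sequence_length):
    """Pure-Python mirror of split_sample(), for illustration only."""
    # the first pair comes from the start of the chunk
    pairs = [(sample[:sequence_length], sample[sequence_length])]
    # same loop bounds as split_sample()
    for i in range(1, (len(sample) - 1) // 2):
        pairs.append((sample[i:i + sequence_length], sample[i + sequence_length]))
    return pairs


pairs = split_sample_py("python is a great pro", sequence_length=10)
for input_, target in pairs[:3]:
    print(repr(input_), "->", repr(target))
print("pairs per chunk:", len(pairs))
```

With a chunk of 2*sequence_length + 1 characters, the loop bounds produce exactly sequence_length (input, target) pairs per chunk, 10 in this example.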

After constructing our samples, let's one-hot encode both the inputs and the labels (targets):

```python
def one_hot_samples(input_, target):
    # one-hot encode the inputs and the targets
    # Example:
    # if character 'd' is encoded as 3 and n_unique_chars = 5
    # result should be the vector: [0, 0, 0, 1, 0], since 'd' is the 4th character
    return tf.one_hot(input_, n_unique_chars), tf.one_hot(target, n_unique_chars)

dataset = dataset.map(one_hot_samples)
```

We've used the convenient map() method to one-hot encode each sample in our dataset, and tf.one_hot() does just what we expect. Let's print the first two data samples along with their shapes:

```python
# print first 2 samples
for element in dataset.take(2):
    print("Input:", ''.join([int2char[np.argmax(char_vector)] for char_vector in element[0].numpy()]))
    print("Target:", int2char[np.argmax(element[1].numpy())])
    print("Input shape:", element[0].shape)
    print("Target shape:", element[1].shape)
    print("="*50, "\n")
```

Here is the output of the second element:

```
Input: project gutenbergs alices adventures in wonderland by lewis carroll

this ebook is for the use of an
Target: y
Input shape: (100, 39)
Target shape: (39,)
```

So each input element has the shape (sequence length, vocabulary size); in this case, the sequence length is 100 and there are 39 unique characters. The output is a one-dimensional, one-hot encoded vector of length 39.
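These shapes are easy to reproduce without TensorFlow. As a rough NumPy equivalent of `tf.one_hot()` (an illustration, not what the pipeline uses), indexing an identity matrix with the integer codes gives one row per character. The toy sizes below (a vocabulary of 5 and a sequence length of 4) are made up for the example.

```python
import numpy as np

n_unique_chars = 5               # toy vocabulary size
input_ = np.array([0, 3, 1, 3])  # toy encoded input sequence (sequence_length = 4)
target = 3                       # toy encoded target, like 'd' in the comment above

# row k of the identity matrix is the one-hot vector for integer k
one_hot_input = np.eye(n_unique_chars)[input_]
one_hot_target = np.eye(n_unique_chars)[target]

print(one_hot_input.shape)   # (sequence_length, vocabulary size)
print(one_hot_target.shape)  # (vocabulary size,)
print(one_hot_target)
```

Taking the argmax along the last axis recovers the integer codes, which is how the printing loop uses np.argmax to turn the vectors back into characters.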

Note: If you're using a different dataset and/or another character filtering mechanism, you'll see a different vocabulary size, since each problem has its own domain. For instance, I also used this to generate Python code, and it had 92 unique characters, because I had to keep the punctuation that Python code needs.

Finally, we repeat, shuffle and batch our dataset:

```python
# repeat, shuffle and batch the dataset
ds = dataset.repeat().shuffle(1024).batch(BATCH_SIZE, drop_remainder=True)
```

We set the optional drop_remainder to True so that any final batch with fewer than BATCH_SIZE samples is discarded; this also gives every batch the same, statically known size.

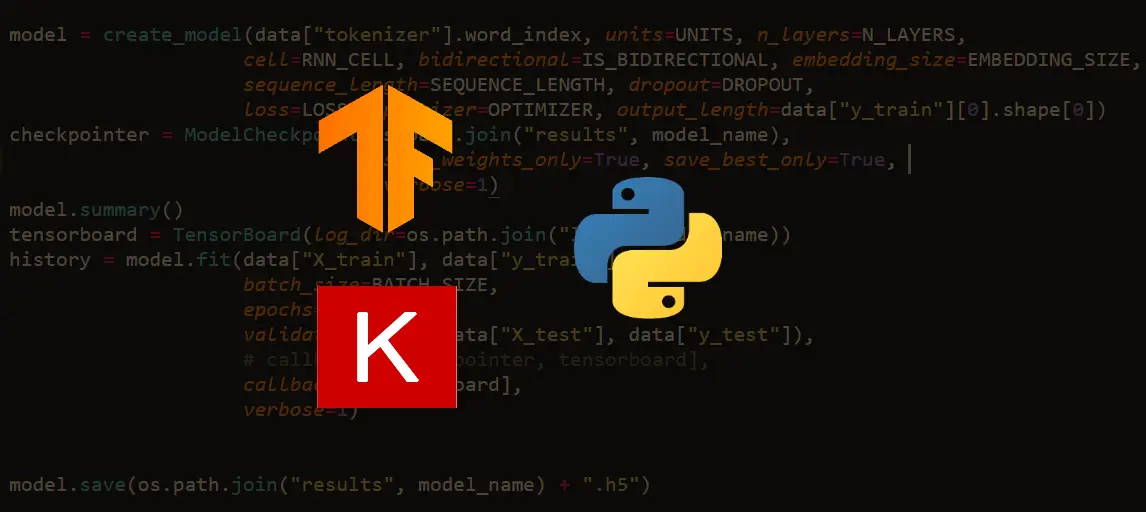

Building the Model

Now let's build the model. It has two LSTM layers, each with an arbitrary choice of 256 units, and a Dropout layer in between. Try experimenting with different model architectures; you're free to do whatever you want!

The output layer is a fully connected (Dense) layer with 39 units, one per character in the vocabulary, and a softmax activation, so it outputs the probability of each character being the next one.

```python
model = Sequential([
    LSTM(256, input_shape=(sequence_length, n_unique_chars), return_sequences=True),
    Dropout(0.3),
    LSTM(256),
    Dense(n_unique_chars, activation="softmax"),
])
```

After we've built our model, let's print the summary and compile it. We're using the Adam optimizer here; I suggest you experiment with different optimizers as well:

```python
# define the model path
model_weights_path = f"results/{BASENAME}-{sequence_length}.h5"
model.summary()
model.compile(loss="categorical_crossentropy", optimizer="adam", metrics=["accuracy"])
```

Training the Model

Let's train the model now:

```python
# make the results folder if it does not exist yet
if not os.path.isdir("results"):
    os.mkdir("results")
# train the model
model.fit(ds, steps_per_epoch=(len(encoded_text) - sequence_length) // BATCH_SIZE, epochs=EPOCHS)
# save the model
model.save(model_weights_path)
```

We fed the Dataset object that we prepared earlier. Since that dataset is repeated indefinitely, the model has no way of knowing how many samples make up an epoch, so we specified the steps_per_epoch parameter. Here it is set to the number of possible input windows in the text (len(encoded_text) - sequence_length) divided by the batch size.

After running the above code, training should start, and it will look something like this:

```
Train for 6473 steps
...
<SNIPPED>
Epoch 29/30
6473/6473 [==============================] - 486s 75ms/step - loss: 0.8728 - accuracy: 0.7509
Epoch 30/30
2576/6473 [==========>...................] - ETA: 4:56 - loss: 0.8063 - accuracy: 0.7678
```

This will take a few hours depending on your hardware; try increasing BATCH_SIZE to 256 for faster training.

Once training is over, a new file containing the trained model should appear in the results folder.

Generating New Text

Here comes the fun part: now that we have successfully built and trained the model, how can we generate new text?

Let's import the necessary modules (if you're working in a single notebook, you don't have to do this):

```python
import numpy as np
import pickle
import tqdm
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, LSTM, Dropout
import os

sequence_length = 100
# dataset file path
FILE_PATH = "data/wonderland.txt"
# FILE_PATH = "data/python_code.py"
BASENAME = os.path.basename(FILE_PATH)
```

We need a sample text to start generating. This depends on your problem: you can take sentences from the training data, on which the model will perform better, but I'll try to produce a new chapter of this book:

```python
seed = "chapter xiii"
```

Let's load the dictionaries that map each character to an integer and vice versa, which we saved during the data preparation phase:

```python
# load vocab dictionaries
char2int = pickle.load(open(f"{BASENAME}-char2int.pickle", "rb"))
int2char = pickle.load(open(f"{BASENAME}-int2char.pickle", "rb"))
vocab_size = len(char2int)
```

Building the model again:

```python
# building the model
model = Sequential([
    LSTM(256, input_shape=(sequence_length, vocab_size), return_sequences=True),
    Dropout(0.3),
    LSTM(256),
    Dense(vocab_size, activation="softmax"),
])
```

Now we need to load the trained model weights:

```python
# load the trained weights
model.load_weights(f"results/{BASENAME}-{sequence_length}.h5")
```

Let's start generating:

```python
s = seed
n_chars = 400
# generate 400 characters
generated = ""
for i in tqdm.tqdm(range(n_chars), "Generating text"):
    # make the input sequence
    X = np.zeros((1, sequence_length, vocab_size))
    for t, char in enumerate(seed):
        X[0, (sequence_length - len(seed)) + t, char2int[char]] = 1
    # predict the next character
    predicted = model.predict(X, verbose=0)[0]
    # converting the vector to an integer
    next_index = np.argmax(predicted)
    # converting the integer to a character
    next_char = int2char[next_index]
    # add the character to results
    generated += next_char
    # shift the seed: drop its first character and append the predicted one
    seed = seed[1:] + next_char

print("Seed:", s)
print("Generated text:")
print(generated)
```

All we are doing here is starting with a seed text, constructing the input sequence, and then predicting the next character. After that, we shift the seed by removing its first character and appending the predicted one. This gives us a slightly changed input sequence of the same length; since the seed is shorter than sequence_length, it is placed at the end of X and the remaining positions are left as zeros.

We then feed this updated input sequence into the model to predict another character. Repeating this process N times will generate a text with N characters.
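The input construction inside the loop can be pulled out into a small helper to see exactly what the model receives. This is a sketch under a few assumptions: the names `encode_seed` and `update_seed` are hypothetical, the tiny vocabulary is made up, and `update_seed` shows a variation that is not in the tutorial's loop, where the context grows until it reaches sequence_length characters instead of staying at the seed's original length.

```python
import numpy as np


def encode_seed(seed, char2int, sequence_length, vocab_size):
    """One-hot encode `seed` right-aligned and zero-padded on the left,
    the same way the generation loop fills X.
    Assumes len(seed) <= sequence_length."""
    X = np.zeros((1, sequence_length, vocab_size))
    offset = sequence_length - len(seed)
    for t, char in enumerate(seed):
        X[0, offset + t, char2int[char]] = 1
    return X


def update_seed(seed, next_char, sequence_length):
    """Variation (not the tutorial's loop): append the prediction and keep
    at most the last sequence_length characters as context."""
    return (seed + next_char)[-sequence_length:]


# toy vocabulary: ' ' -> 0, 'a' -> 1, 'b' -> 2, 'c' -> 3
vocab = " abc"
char2int = {c: i for i, c in enumerate(vocab)}
X = encode_seed("ab", char2int, sequence_length=4, vocab_size=len(vocab))
print(X[0])

seed = "ab"
for next_char in "cab":
    seed = update_seed(seed, next_char, sequence_length=4)
print(repr(seed))
```

The tutorial's update, `seed[1:] + next_char`, keeps the seed at the 12 characters of "chapter xiii", so most of the 100 input positions stay zero; with `update_seed`, the context would fill up after 88 generated characters.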

Here is an interesting piece of generated text:

```
Seed: chapter xiii
Generated Text:
ded of and alice as it go on and the court
well you wont you wouldncopy thing
there was not a long to growing anxiously any only a low every cant
go on a litter which was proves of any only here and the things and the mort meding and the mort and alice was the things said to herself i cant remeran as if i can repeat eften to alice any of great offf its archive of and alice and a cancur as the mo
```

That is clearly English! But as you may notice, most of the sentences don't make any sense, and there are several reasons for that. One of the main ones is that the model was trained on a small dataset (a single book). Also, the model architecture isn't optimal; state-of-the-art transformer models such as GPT-2 tend to outperform this one drastically.

Note, though, that this is not limited to English text; you can use whatever type of text you want. In fact, you can even generate Python code once you have enough lines of code.

Conclusion

Great, we are done. Now you know how to:

- Use RNNs in TensorFlow and Keras as generative models.

- Clean text and build TensorFlow input pipelines using the tf.data API.

- Train an LSTM network on text sequences.

- Tune the performance of the model.

In order to further improve the model, you can:

- Reduce the vocabulary size by removing rare characters.

- Add more LSTM and Dropout layers with more LSTM units, or even add Bidirectional layers.

- Tweak some hyperparameters such as batch size, optimizer, and even sequence_length, and see which works best.

- Train on more epochs.

- Use a larger dataset.

Check the full code here.


Happy Training ♥
