
Transformer models have produced state-of-the-art results on most natural language processing tasks. Transfer learning combined with large-scale transformer language models has become the standard approach in modern NLP.

One of the most significant milestones in the evolution of NLP was the release of Google's BERT model in late 2018, which is widely regarded as the beginning of a new era in the field.

In this tutorial, we will take you through an example of fine-tuning BERT (and other transformer models) for text classification using the Huggingface Transformers library on the dataset of your choice.

Please note that this tutorial is about fine-tuning the BERT model on a downstream task (such as text classification). Training BERT from scratch is called pre-training, and it is covered in a separate tutorial.

We'll be using the 20 newsgroups dataset as a demo for this tutorial; it is a dataset that has about 18,000 news posts on 20 different topics. If you have a custom dataset for classification, you can follow along as well, since you'll need to make very few changes. For example, I've applied this tutorial to fake news detection, and it works great.

You can also fine-tune BERT for other downstream tasks, such as semantic textual similarity, which has its own tutorial.

To get started, let's install the Huggingface transformers library along with others:

$ pip install transformers numpy torch scikit-learn

Open up a new notebook/Python file and import the necessary modules:

import random

import numpy as np
import torch
from sklearn.datasets import fetch_20newsgroups
from sklearn.model_selection import train_test_split
from transformers import BertTokenizerFast, BertForSequenceClassification
from transformers import Trainer, TrainingArguments
from transformers.file_utils import is_tf_available, is_torch_available

Next, let's make a function to set the seed so we get the same results across runs:

def set_seed(seed: int):
    """
    Helper function for reproducible behavior to set the seed in ``random``, ``numpy``, ``torch`` and/or ``tf`` (if
    installed).

    Args:
        seed (:obj:`int`): The seed to set.
    """
    random.seed(seed)
    np.random.seed(seed)
    if is_torch_available():
        torch.manual_seed(seed)
        torch.cuda.manual_seed_all(seed)
        # ^^ safe to call this function even if cuda is not available
    if is_tf_available():
        import tensorflow as tf

        tf.random.set_seed(seed)

set_seed(1)

As mentioned earlier, we'll be using the BERT model. More specifically, we'll be using the bert-base-uncased pre-trained weights from the library. Again, if you wish to pre-train on your own large dataset, the separate pre-training tutorial should help you do that.

Also, we'll be using max_length of 512:

# the model we're going to train, base uncased BERT
# check text classification models here: https://huggingface.co/models?filter=text-classification
model_name = "bert-base-uncased"
# max sequence length for each document/sentence sample
max_length = 512

max_length is the maximum length of our sequence. In other words, we'll be picking only the first 512 tokens from each document or post. You can lower it to save memory. Keep in mind that bert-base-uncased accepts at most 512 tokens, so larger values only make sense for models with a longer context, and even then the longer sequences must fit in memory during training, possibly with a smaller batch size.
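To get a feel for why sequence length matters for memory, here is a rough back-of-the-envelope sketch. It only counts the self-attention score matrices of a single layer, assumes 12 attention heads (as in BERT base) and 4-byte float32 values, and ignores every other activation, so treat the numbers as a lower bound rather than a real memory estimate. The function name is made up for this illustration.

```python
def attention_scores_bytes(batch_size, seq_len, num_heads=12, bytes_per_value=4):
    """Bytes needed to hold one layer's attention score matrices.

    Each head stores a (seq_len x seq_len) matrix per sample, so the cost
    grows quadratically with the sequence length.
    """
    return batch_size * num_heads * seq_len * seq_len * bytes_per_value


for seq_len in (128, 256, 512):
    mib = attention_scores_bytes(batch_size=8, seq_len=seq_len) / 2**20
    print(f"max_length={seq_len}: {mib:.0f} MiB per layer")
```

Under these assumptions, going from 128 to 512 tokens multiplies this part of the memory footprint by 16, which is why a shorter max_length is a common first remedy for out-of-memory errors.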


Loading the Dataset

Next, let's download and load the tokenizer responsible for converting our text to sequences of tokens:

# load the tokenizer
tokenizer = BertTokenizerFast.from_pretrained(model_name, do_lower_case=True)

We also set do_lower_case to True to make sure we lowercase all the text (remember, we're using the uncased model).

The below code downloads and loads the dataset:

def read_20newsgroups(test_size=0.2):
    # download & load 20newsgroups dataset from sklearn's repos
    dataset = fetch_20newsgroups(subset="all", shuffle=True, remove=("headers", "footers", "quotes"))
    documents = dataset.data
    labels = dataset.target
    # split into training & testing sets and return the data as well as the label names
    return train_test_split(documents, labels, test_size=test_size), dataset.target_names

# call the function
(train_texts, valid_texts, train_labels, valid_labels), target_names = read_20newsgroups()

train_texts and valid_texts are lists of documents (lists of strings) for the training and validation sets, respectively. Likewise, train_labels and valid_labels hold the integer labels, ranging from 0 to 19. target_names is a list of the names of our 20 labels.
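To make the shape of that return value concrete, here is a dependency-free sketch of a shuffled 80/20 split. It is not scikit-learn's implementation: simple_split and split_sizes are hypothetical helpers, and rounding the validation size up is an assumption chosen so that a corpus of 18,846 posts yields the 15,076 training examples reported in the training log further down.

```python
import math
import random


def split_sizes(n_samples, test_size=0.2):
    """Return (n_train, n_valid), rounding the validation share up."""
    n_valid = math.ceil(n_samples * test_size)
    return n_samples - n_valid, n_valid


def simple_split(texts, labels, test_size=0.2, seed=1):
    """Shuffle indices once, then cut texts and labels at the same position."""
    indices = list(range(len(texts)))
    random.Random(seed).shuffle(indices)
    n_train, _ = split_sizes(len(texts), test_size)
    train_idx, valid_idx = indices[:n_train], indices[n_train:]
    return (
        [texts[i] for i in train_idx],
        [texts[i] for i in valid_idx],
        [labels[i] for i in train_idx],
        [labels[i] for i in valid_idx],
    )


# toy corpus: 10 "posts" with labels 0, 1, 2
texts = [f"post {i}" for i in range(10)]
labels = [i % 3 for i in range(10)]
toy_train_texts, toy_valid_texts, toy_train_labels, toy_valid_labels = simple_split(texts, labels)
print(len(toy_train_texts), len(toy_valid_texts))
```

The important property is that texts and labels are indexed with the same shuffled positions, so every document keeps its own label after the split.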

Now let's use our tokenizer to encode our corpus:

# tokenize the dataset, truncate when passed `max_length`,
# and pad with 0's up to the longest sequence
train_encodings = tokenizer(train_texts, truncation=True, padding=True, max_length=max_length)
valid_encodings = tokenizer(valid_texts, truncation=True, padding=True, max_length=max_length)

We set truncation to True so that tokens beyond max_length are dropped, and padding to True so that shorter documents are padded with empty tokens up to the longest sequence in the set (which equals max_length here whenever at least one document gets truncated).
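As a minimal illustration of what truncation and padding do to the token IDs, the sketch below works on plain Python lists. It is a simplification and not the tokenizer's actual code: truncate_and_pad is a hypothetical helper, the token IDs are made up, and unlike the real tokenizer it does not keep the closing special token when it cuts a sequence.

```python
def truncate_and_pad(batch_ids, max_length, pad_id=0):
    """Cut each sequence to max_length, then pad to the longest one left."""
    truncated = [ids[:max_length] for ids in batch_ids]
    target_len = max(len(ids) for ids in truncated)
    input_ids, attention_mask = [], []
    for ids in truncated:
        n_pad = target_len - len(ids)
        input_ids.append(ids + [pad_id] * n_pad)
        # 1 marks a real token, 0 marks padding the model should ignore
        attention_mask.append([1] * len(ids) + [0] * n_pad)
    return {"input_ids": input_ids, "attention_mask": attention_mask}


batch = truncate_and_pad(
    [[101, 7592, 102], [101, 7592, 2088, 999, 2651, 102]],
    max_length=5,
)
print(batch["input_ids"])
print(batch["attention_mask"])
```

The attention mask is what lets the model tell real tokens apart from the zeros added as padding.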

The below code wraps our tokenized text data into a torch Dataset:

class NewsGroupsDataset(torch.utils.data.Dataset):
    def __init__(self, encodings, labels):
        self.encodings = encodings
        self.labels = labels

    def __getitem__(self, idx):
        item = {k: torch.tensor(v[idx]) for k, v in self.encodings.items()}
        item["labels"] = torch.tensor([self.labels[idx]])
        return item

    def __len__(self):
        return len(self.labels)

# convert our tokenized data into a torch Dataset
train_dataset = NewsGroupsDataset(train_encodings, train_labels)
valid_dataset = NewsGroupsDataset(valid_encodings, valid_labels)

Since we're going to use Trainer from the Transformers library, which expects our dataset as a torch.utils.data.Dataset, we made a simple class that implements the __len__() method to return the number of samples and the __getitem__() method to return the data sample at a specific index.
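To see how single samples end up as a training batch, here is a conceptual sketch on plain lists, without torch. Both get_item and collate are hypothetical names for this illustration, the token IDs are made up, and a real data loader stacks tensors rather than nesting lists.

```python
def get_item(encodings, labels, idx):
    """Pick row `idx` from every column, mirroring what __getitem__ does."""
    item = {key: values[idx] for key, values in encodings.items()}
    item["labels"] = labels[idx]
    return item


def collate(items):
    """Group per-sample dicts back into one dict of batched columns."""
    return {key: [item[key] for item in items] for key in items[0]}


toy_encodings = {
    "input_ids": [[101, 7592, 102], [101, 2088, 102], [101, 2651, 102]],
    "attention_mask": [[1, 1, 1], [1, 1, 1], [1, 1, 1]],
}
toy_labels = [3, 7, 11]

sample = get_item(toy_encodings, toy_labels, 1)
toy_batch = collate([get_item(toy_encodings, toy_labels, i) for i in (0, 2)])
print(sample)
print(toy_batch["labels"])
```

The dataset stores column-oriented data, hands out one row at a time, and the batching step turns a handful of rows back into columns for the model.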

Training the Model

Now that we have our data prepared, let's download and load our BERT model and its pre-trained weights:

# load the model and pass to CUDA
model = BertForSequenceClassification.from_pretrained(model_name, num_labels=len(target_names)).to("cuda")

We're using the BertForSequenceClassification class from the Transformers library, and we set num_labels to the number of available labels, in this case, 20.

We also move our model to the CUDA GPU. If you're on CPU (not suggested), just delete the to() call.

Before we start fine-tuning our model, let's make a simple function to compute the metrics we want. In this case, accuracy:

from sklearn.metrics import accuracy_score

def compute_metrics(pred):
    labels = pred.label_ids
    preds = pred.predictions.argmax(-1)
    # calculate accuracy using sklearn's function
    acc = accuracy_score(labels, preds)
    return {
        'accuracy': acc,
    }

You're free to include any metric you want. I've included accuracy, but you can add precision, recall, etc.
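For reference, here is a dependency-free sketch of macro-averaged precision and recall, to show what those extra metrics would measure. The helper macro_precision_recall is hypothetical and the labels below are toy values; in practice you would more likely call scikit-learn's precision_recall_fscore_support inside compute_metrics.

```python
def macro_precision_recall(labels, preds):
    """Average per-class precision and recall, giving each class equal weight."""
    classes = sorted(set(labels) | set(preds))
    precisions, recalls = [], []
    for c in classes:
        true_pos = sum(1 for y, p in zip(labels, preds) if y == c and p == c)
        predicted = sum(1 for p in preds if p == c)  # times the model said c
        actual = sum(1 for y in labels if y == c)    # times c was correct
        precisions.append(true_pos / predicted if predicted else 0.0)
        recalls.append(true_pos / actual if actual else 0.0)
    return {
        "precision": sum(precisions) / len(classes),
        "recall": sum(recalls) / len(classes),
    }


toy_true = [0, 0, 1, 1, 2, 2]
toy_pred = [0, 1, 1, 1, 2, 0]
scores = macro_precision_recall(toy_true, toy_pred)
print(scores)
```

Macro averaging treats all 20 newsgroups equally, which is useful when you care about the smaller classes as much as the larger ones.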

The below code uses TrainingArguments class to specify our training arguments, such as the number of epochs, batch size, and some other parameters:

training_args = TrainingArguments(
    output_dir='./results',          # output directory
    num_train_epochs=3,              # total number of training epochs
    per_device_train_batch_size=8,   # batch size per device during training
    per_device_eval_batch_size=20,   # batch size for evaluation
    warmup_steps=500,                # number of warmup steps for learning rate scheduler
    weight_decay=0.01,               # strength of weight decay
    logging_dir='./logs',            # directory for storing logs
    load_best_model_at_end=True,     # load the best model when finished training (default metric is loss)
    # but you can specify `metric_for_best_model` argument to change to accuracy or other metric
    logging_steps=400,               # log every `logging_steps`
    save_steps=400,                  # save a checkpoint every `save_steps`
    evaluation_strategy="steps",     # evaluate every `logging_steps`
    # (recent Transformers releases call this argument `eval_strategy`)
)

Each argument is explained in the code comments. I've specified 8 as the training batch size because it's the maximum that fits in a Google Colab environment's memory. If you get a CUDA out of memory error, decrease it further. If you have a more powerful GPU in your environment, increasing it will make the training significantly faster.

You can also tweak other parameters, such as increasing the number of epochs for better training.
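One parameter that is easy to overlook is warmup_steps. The sketch below shows a linear warmup followed by a linear decay, which is my understanding of the schedule the Trainer uses by default. The base learning rate of 5e-5 is assumed to be the library default, the total of 5655 steps matches the training log shown later, and linear_warmup_lr is a hypothetical helper rather than a library function.

```python
def linear_warmup_lr(step, base_lr=5e-5, warmup_steps=500, total_steps=5655):
    """Learning rate at a given optimization step.

    Ramps linearly from 0 to base_lr during warmup, then decays linearly
    back to 0 by the final step.
    """
    if step < warmup_steps:
        return base_lr * step / warmup_steps
    remaining = max(0, total_steps - step)
    return base_lr * remaining / (total_steps - warmup_steps)


for step in (0, 250, 500, 3000, 5655):
    print(step, linear_warmup_lr(step))
```

The warmup keeps the first few hundred updates small, which tends to make fine-tuning more stable while the randomly initialized classification head settles.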

I've set logging_steps and save_steps to 400, which means the model is evaluated and saved every 400 steps. If you lower the batch size below 8, make sure to increase these values: a smaller batch size means more steps per epoch, so the same interval produces many more checkpoints, which may fill up your environment's disk space.
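The arithmetic behind that advice can be sketched in a few lines. This assumes a single device and no gradient accumulation, and uses the 15,076 training examples reported in the training log; both function names are made up for this illustration.

```python
import math


def training_steps(num_examples, batch_size, epochs):
    """Total optimization steps: batches per epoch (rounded up) times epochs."""
    return math.ceil(num_examples / batch_size) * epochs


def num_checkpoints(total_steps, save_steps):
    """How many times a checkpoint is written during training."""
    return total_steps // save_steps


for batch_size in (8, 4):
    steps = training_steps(15076, batch_size, epochs=3)
    print(batch_size, steps, num_checkpoints(steps, save_steps=400))
```

Halving the batch size doubles both the number of steps and the number of checkpoints at the same save_steps. Besides raising save_steps, the save_total_limit training argument can cap how many checkpoints are kept on disk.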

We then pass our training arguments, dataset, and compute_metrics callback to our Trainer:

trainer = Trainer(
    model=model,                         # the instantiated Transformers model to be trained
    args=training_args,                  # training arguments, defined above
    train_dataset=train_dataset,         # training dataset
    eval_dataset=valid_dataset,          # evaluation dataset
    compute_metrics=compute_metrics,     # the callback that computes metrics of interest
)

Training the model:

# train the model
trainer.train()

This will take several minutes or hours, depending on your environment. Here's my output on Google Colab:

***** Running training *****
  Num examples = 15076
  Num Epochs = 3
  Instantaneous batch size per device = 8
  Total train batch size (w. parallel, distributed & accumulation) = 8
  Gradient Accumulation steps = 1
  Total optimization steps = 5655
 [5655/5655 4:12:58, Epoch 3/3]

Step    Training Loss    Validation Loss    Accuracy
400     1.873200         1.402060           0.606631
800     1.244500         1.086071           0.680106
1200    1.104400         1.077154           0.670557
1600    0.996500         0.955149           0.709284
2000    0.811500         0.921275           0.729708
2400    0.799500         0.916478           0.731034
2800    0.683500         0.881080           0.747480
3200    0.689100         0.861604           0.754642
3600    0.612900         0.873568           0.757294
4000    0.419000         0.895653           0.767639
4400    0.420800         0.911871           0.773210
4800    0.457800         0.900206           0.769496
5200    0.360400         0.893304           0.778780
5600    0.334600         0.888858           0.778249

As you can see, the validation loss decreases for most of the run, reaching its minimum of about 0.86 at step 3200 before leveling off, while the accuracy climbs to roughly 77.8%.
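The table can also be read programmatically. Here is a minimal sketch, with the rows copied from the output above, that finds the evaluation step with the lowest validation loss and the one with the highest accuracy. These are the two selection criteria mentioned in the TrainingArguments comments (loss by default, or another metric via metric_for_best_model). The variable names are only for this illustration.

```python
# (step, validation loss, accuracy) rows copied from the training output
eval_log = [
    (400, 1.402060, 0.606631),
    (800, 1.086071, 0.680106),
    (1200, 1.077154, 0.670557),
    (1600, 0.955149, 0.709284),
    (2000, 0.921275, 0.729708),
    (2400, 0.916478, 0.731034),
    (2800, 0.881080, 0.747480),
    (3200, 0.861604, 0.754642),
    (3600, 0.873568, 0.757294),
    (4000, 0.895653, 0.767639),
    (4400, 0.911871, 0.773210),
    (4800, 0.900206, 0.769496),
    (5200, 0.893304, 0.778780),
    (5600, 0.888858, 0.778249),
]

best_by_loss = min(eval_log, key=lambda row: row[1])
best_by_accuracy = max(eval_log, key=lambda row: row[2])
print("lowest validation loss at step", best_by_loss[0])
print("highest accuracy at step", best_by_accuracy[0])
```

In this log, the two criteria point to different steps, so the choice of metric determines which checkpoint counts as the best one.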

Remember that we set load_best_model_at_end to True, which automatically loads the best-performing model when training finishes. Let's confirm with the evaluate() method:

# evaluate the current model after training
trainer.evaluate()

This will take several seconds to output something like this:

{'epoch': 3.0,
 'eval_accuracy': 0.7758620689655172,
 'eval_loss': 0.80070960521698}

Now that we've trained our model, let's save it for inference later:

# saving the fine tuned model & tokenizer
model_path = "20newsgroups-bert-base-uncased"
model.save_pretrained(model_path)
tokenizer.save_pretrained(model_path)

Performing Inference

Now that we have a model trained on our dataset, let's try to have some fun with it!

The below function takes a text as a string, tokenizes it with our tokenizer, calculates the output probabilities using the softmax function, and returns the predicted label:

def get_prediction(text):
    # prepare our text into tokenized sequence
    inputs = tokenizer(text, padding=True, truncation=True, max_length=max_length, return_tensors="pt").to("cuda")
    # perform inference to our model (gradients are not needed here)
    with torch.no_grad():
        outputs = model(**inputs)
    # get output probabilities by doing softmax
    probs = outputs[0].softmax(1)
    # executing argmax function to get the candidate label
    return target_names[probs.argmax()]

Here's an example:

# Example #1
text = """
The first thing is first.
If you purchase a Macbook, you should not encounter performance issues that will prevent you from learning to code efficiently.
However, in the off chance that you have to deal with a slow computer, you will need to make some adjustments.
Having too many background apps running in the background is one of the most common causes.
The same can be said about a lack of drive storage.
For that, it helps if you uninstall xcode and other unnecessary applications, as well as temporary system junk like caches and old backups.
"""
print(get_prediction(text))

Output:

comp.sys.mac.hardware

As expected, we're talking about Macbooks. Here's a second example:

# Example #2
text = """
A black hole is a place in space where gravity pulls so much that even light can not get out.
The gravity is so strong because matter has been squeezed into a tiny space. This can happen when a star is dying.
Because no light can get out, people can't see black holes.
They are invisible. Space telescopes with special tools can help find black holes.
The special tools can see how stars that are very close to black holes act differently than other stars.
"""
print(get_prediction(text))

Output:

sci.space

This is a label of science -> space, as expected!
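get_prediction returns only the single most likely label. If you also want to see how confident the model is, a top-k view of the softmax probabilities can help. The sketch below shows the idea on plain Python lists: the logits are invented for illustration, and softmax and top_k are hypothetical helpers, not part of the tutorial's code.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(x - highest) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(logits, names, k=3):
    """Return the k most probable (label, probability) pairs, best first."""
    probs = softmax(logits)
    ranked = sorted(zip(names, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


toy_names = ["comp.sys.mac.hardware", "sci.med", "sci.space"]
toy_logits = [-1.0, 0.5, 2.0]  # made-up scores, one per label
ranking = top_k(toy_logits, toy_names, k=2)
for name, prob in ranking:
    print(f"{name}: {prob:.3f}")
```

The first entry of the ranking is what argmax would return, and the gap to the second entry gives a rough sense of how decisive the prediction is.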

Yet another example:

# Example #3
text = """
Coronavirus disease (COVID-19) is an infectious disease caused by a newly discovered coronavirus.
Most people infected with the COVID-19 virus will experience mild to moderate respiratory illness and recover without requiring special treatment.
Older people, and those with underlying medical problems like cardiovascular disease, diabetes, chronic respiratory disease, and cancer are more likely to develop serious illness.
"""
print(get_prediction(text))

Output:

sci.med

Conclusion

In this tutorial, you've learned how to fine-tune the BERT model on your dataset using the Huggingface Transformers library.

Note that you can also use other transformer models, such as GPT-2 with GPT2ForSequenceClassification, RoBERTa with RobertaForSequenceClassification, DistilBERT with DistilBertForSequenceClassification, and many more. Please head to the official documentation for a list of available models.

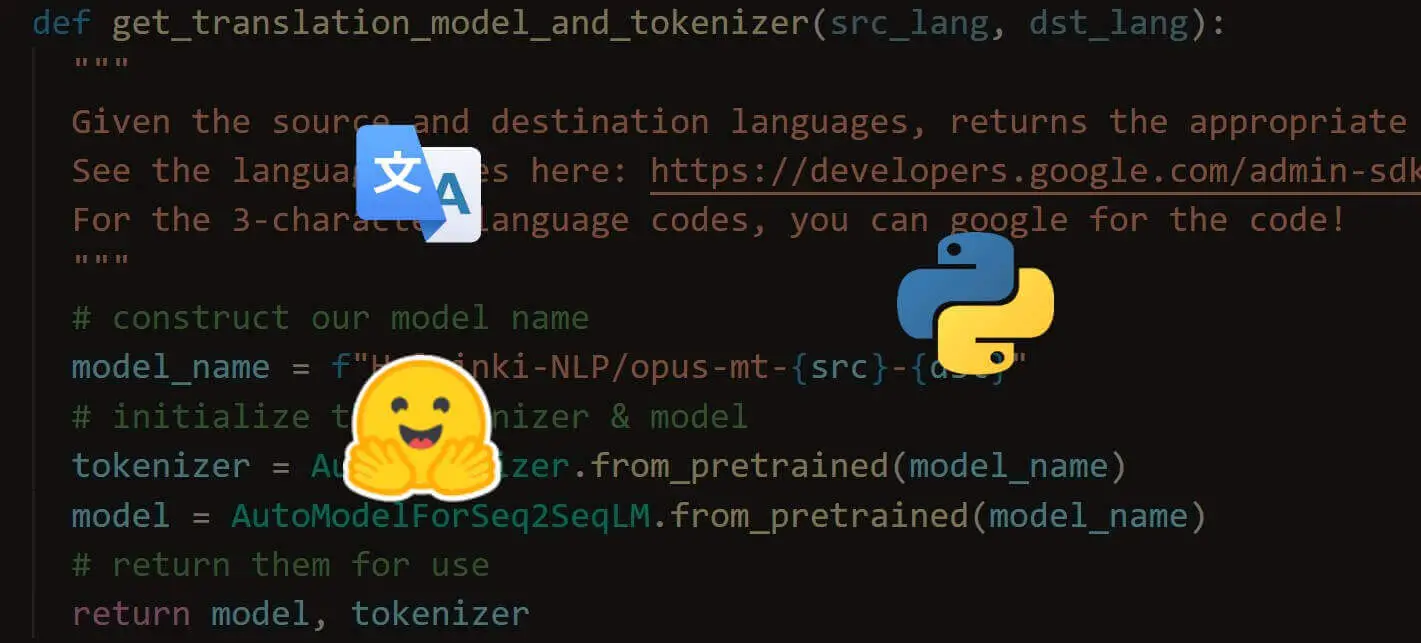

Also, if your dataset is in a language other than English, make sure you pick weights trained on your language, as this will help a lot during training. Browse the model hub (https://huggingface.co/models) and use the filters to find the model weights you need.
